Your browser doesn't support the features required by impress.js, so you are presented with a simplified version of this presentation.

For the best experience please use the latest Chrome or Safari browser. Firefox 10 (to be released soon) will also handle it.

Machine Learning

(Python edition)

Ed Toro

How do you teach a machine to learn?

- Input.

How do you teach a machine to learn?

- Input.

- Data structures.

[[1,2,3], [4,5,6], [7,8,9]]

How do you teach a machine to learn?

- Input.

- Data structure.

- Reduction.

How do you teach a machine to learn?

- Input.

- Data structure.

- Reduction.

- Inference.

Input: visitors to a "guess your weight" site

Data Structure: you can't record everything about a visitor

Reduction: you can't guess every value you've recorded

Average weight of all previous visitors: 150lbs

Inference: What can we infer from the data? What weight do we guess?

- The next visitor probably weighs as much as the average of all previous visitors.

- The next male visitor probably weighs as much as the average of all previous male visitors.

- etc...

Python Data Structures

- lists: [1,2,3,4]

- tuples: (1,2)

- dictionaries: {'haterz':2, 'lovahs':0, 'playas':2}

- nested combinations of the above:

[2, [2,3], (4,5), {'playa':'hatah'}] - serialized storage: databases, files, NoSQL, etc.

Request/Response Cycle - Feedback

- Receive user request

- Infer a response based on prior knowledge data structures

- Receive user request with feedback

- Combine user feedback with existing body of knowledge

- Reduce

Request/Response Cycle - Training

- Collect user data

- Build data structures

- Reduce

- Receive user request

- Infer a response based on prior knowledge

Simple example: Inference by mean average

- Input & Data Structure

knowledge =

retrieve_and_deserialize_data_from_datasource()

knowledge.append(user_weight)

- Reduction & Inference

from numpy import average

reduction = average(knowledge)

response.inference = reduction

The mean average "learns". When it's wrong, it adapts.

Faster simple example: Inference by mean average

- Reduction & Inference

prev_sum, prev_count = cache.get("key")

new_sum = prev_sum + user_weight

new_count = prev_count + 1

cache.put("key", (new_sum, new_count))

new_mean = new_sum / new_count

response.inference = new_mean

The knowledge set is big data. The ability to do incremental updates is key to machine learning performance.

Another simple example: genetic algorithm

best_guess = retrieve_most_successful_guess()

response.inference = best_guess

if we_guessed_correctly():

best_guess.success += 1

else:

best_guess.success -= 1

best_guess.save()

Use whichever guess is the most successful "in the wild". When a guess fails, reduce its fitness, allowing another best guess to possibly arise. The visitor has to let us know if we were correct.

Complex example: neural network

- Input & Data Structure

nodes = retrieve()

nodes[user_data] = nodes.get(user_data, 0) + 1

- Reduction & Inference

reduction = sorted(nodes, key=nodes.get)[-1]

nodes is a frequency chart. If 200 appears twice, nodes[200] == 2. The strongest node wins. It's the mode average of the knowledge set. It's just another averaging function!

This can get really complicated...

- K-Means Clustering

- combines means and neural network nodes

- clusters inputs into k buckets identified by k different mean averages

- infers the mean value of the bucket including or closest to the input

- Bayes Classification

- given some known probabilities, calculate some unknown probability, and infer from it

- e.g. given the probability that a site visitor weighs 150+lbs. (P(150+)) and that a 150+lbs. site visitor is male (P(M|150+)), calculates the probability that a male visitor weighs 150+lbs. (P(150+|M))

Good news - the machine learning algorithms have already been written for you.

Stop inventing "new" machine learning algorithms for your site. Just pick one!

OMGWTF! There are so many. Which one do I pick?

All of the above!

The Meta-Learning Algorithm

best_learning_algo = retrieve_most_successful_algorithm()

response.inference = best_learning_algo.guess(user_data)

if we_guessed_correctly():

best_learning_algo.success += 1

else:

best_learning_algo.success -= 1

best_learning_algo.save()

It's the genetic algorithm applied to other learning algorithms. Implement all the learners, store them somewhere, and order them by how successful they are.

The "best"? The "most successful"?

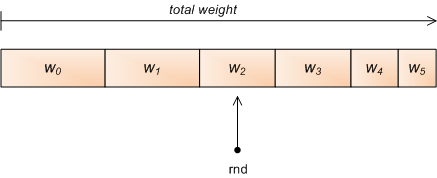

A "Bestest" algorithm - a weighted random choice

from random import uniform

algos = { 'kmeans': 50, 'mean': 25, 'bayes': 20, 'neural': 5 }

needle = uniform(0, sum(algos.values()))

for name in sorted(algos, key=algos.get):

needle -= algos[name]

if needle <= 0:

break

# return name

FAIL! All weights must be > 0 for that to work.

success = max(0, success - 1)

The algorithms may be free or cloud-cheap, but the data isn't.

Stop worrying about algorithms. It's the data, stupid!

There are two types of data

- Content

- Collaborative

- E-Commerce

- price

- color

- size

- Music

- genre

- beats-per-minute

- tempo

- Site pages

- main keywords

- length

It's data that describes the content on your site. When two pieces of content are similar, we infer a user who likes one will also like the other.

- gender

- age

- location

- favorite content

- friends

It's data describing the visitors to your site. When two visitors are similar, we infer that they'll like the same stuff.

Data needs to be numerical so you can do math on it.

- gender: { 'male': 0, 'female': 1, 'other': 2}

- employed: { 'false': 0, 'true': 1}

When possible, "numerification" should be meaningful. Labels that are related map to numbers that are closer.

- language: { 'en': 0, 'en-us': 1, 'en-gb': 2, 'es': 10, 'es-es': 11, 'es-mx': 12 }

- education: { 'some high-school': 0, 'high-school': 1, 'some college': 2, 'college': 3, 'masters': 4}

The fully numerified description of a piece of content or a visitor is its "DNA".

['gender', 'age', 'location_lat', 'location_long']

becomes

[0, 30, 26, -80]

The similarity score between two pieces of content is the "distance" between these two "points". The lower the score, the more similar the content.

import numpy

# 30, male, Miami

person1 = numpy.array((0, 30, 25.8, -80.2))

# 25, female, Ft. Lauderdale

person2 = numpy.array((1, 25, 26.1, -80.1))

score = numpy.linalg.norm(person1 - person2)

# score is approx 5

In numpy, the distance between the two points is the norm of the vector between those points. You may remember it better as the Euclidean distance as given by the Pythagorean theorem.

score2 = (a1-a2)2 + (b1-b2)2 + (c1-c2)2 + (d1-d2)2

Performance

Machine learning is a "big data" problem. Learn how those guys solve these kinds of problems.

(Hints: MapReduce - I called it "reduction" for a reason, Apache Pig, Apache Hadoop, etc.)

Say your website has 1 million songs. Imagine a grid with 106 rows and 106 columns. How many pairwise comparisons does that produce?

106 * 106 = 1012 (1 trillion*!)

*actually more like half a trillion if you ignore A vs. A and assume (A vs. B) = (B vs. A), but still...

Now add a collaborative analysis: every user is compared to every other user. More input!

- How long does it take to calculate all those scores?

- How many numbers make up each song's "DNA"?

- How many numbers make up each song's "DNA"?

- Where do you store the results?

- Hey database guy! You got room for 1 trillion rows lying around somewhere?

- Hey database guy! You got room for 1 trillion rows lying around somewhere?

- How quickly can you lookup a result at runtime?

- e.g. Generate the top 5 most similar songs.

- e.g. Generate the top 5 most similar songs.

- How often do you need to update your library?

- e.g. If you update weekly, but it takes two weeks to structure and reduce the data, you'll never catch up.

- e.g. If you update weekly, but it takes two weeks to structure and reduce the data, you'll never catch up.

Cluster the songs so that similar songs get grouped together. All songs in the same cluster are equally similar to each other.

e.g. If you have 1 million songs, group them into chunks of 100 songs each, leaving 10,000 song-clusters.

Algorithm options:

- hierarchical

- k-means

- self-organizing maps

Storage: You only have to store 10,000 cluster ids instead of 1 trillion sim scores.

Lookup: To find the songs most similar to any given song, randomly select other songs from the same cluster.

Updates: Calculate "average DNAs" for each cluster. Place the new song in the cluster whose average DNA is closest. For the 1,000,001st song, that's 10,000 comparisons instead of 1 million.

Johnny 5 can learn!

Thanks for listening!

@eddroid

github.com/eddroid

Google Images:

- http://www.schoolsignsonline.com.au/contents/media/34502-reduce-reuse-recycle.gif

- http://i0.kym-cdn.com/photos/images/newsfeed/000/039/080/5008_9c00_420.gif

- http://2.bp.blogspot.com/_g6jnLh81N64/TO48Xn1ZtnI/AAAAAAAAASA/MQHdSg2fQmI/s1600/Walking+skeleton.png

- http://www.coverbrowser.com/image/popular-library/158-1.jpg

- http://cache.jalopnik.com/assets/images/4/2008/06/lego_johnny_five.jpg

- http://www.retroist.com/wp-content/uploads/2011/07/johnny_5_is_alive_by_speedball0o.jpg

- http://www.schoolsignsonline.com.au/contents/media/34502-reduce-reuse-recycle.gif

- http://aux.iconpedia.net/uploads/1442736216.png

- http://blogs.villagevoice.com/runninscared/sstop.jpg

Google Images:

- http://profile.ak.fbcdn.net/hprofile-ak-snc4/162076_149773725075253_7380223_n.jpg

- http://4.bp.blogspot.com/_vd_zVJKziTE/TO_Ow5GbxoI/AAAAAAAAA8U/jbZ7VoM23yw/s400/mandy%2Bp.jpg

- http://obscureinternet.com/wp-content/uploads/Concert-Fail.jpg

http://eli.thegreenplace.net/2010/01/22/weighted-random-generation-in-python/

- http://eli.thegreenplace.net/wp-content/uploads/2010/01/subweight.png

We beat that SOPA/PIPA thing, right?

- Derived properties (e.g. entropy)

- Alternate distances (e.g. Hamming, Manhattan, maximum)

- User algorithm preferences